Unfortunately for my father, this is not a blog about Masters of Laws, but rather about Large Language Models, or LLMs, which have been the driving force behind some of the recent advancement in Generative AI. If you have been even remotely online for the past several months, you have likely seen generative ai that creates images like this:

Or text like this:

Suffice it to say, Generative AI has become all of the rage. The current thing / meme among very online VCs is that in order to get funded, all you need to do is change you url to a .ai address, and the money will flow in. When startup ideas start getting memed like that, it’s worth doing two things: (1) keeping a keen eye on what is being funded and why and (2) remaining optimistically skeptical. Which is to say, trust, but verify almost everything you hear and be happy when your misgivings are proven incorrect and don’t gloat when they aren’t.

Unlike a lot of startup hype cycles, artificial intelligence and machine learning have already gone through multiple, decades-long “winters” - so much so that the sector’s winter’s even have their own wikipedia page. So it’s not as if this is brand new technology that is completely untested. Various forms of AI have been deployed into the real world in large, enterprise use cases. Not only that, but large research institutions have machine learning and artificial intelligence research labs and have a plethora of patents on the subject. Real, smart, credentialed people think this is a real, smart problem worth working on. While it might not be all of the way there yet, it’s incrementally getting better and incrementally getting more useful. Not only that, but it falls under one my favorite frameworks of predicting tech, in that the use cases are pretty clear and the roadmap makes sense at this point.

I have written about AI broadly in the past. Ironically, that post, which was dated September 2019, started with the line: In the tech startup world, it seems like all you ever hear about these days is artificial intelligence and machine learning. As the saying goes, the more things change, the more they stay the same. But there are two excerpts that still remain largely true when I think about AI / Machine Learning:

Pattern Recognition. One of the cool things about being a human is our innate ability to recognize patterns. Pattern recognition is one of the key cognitive abilities that separates humans from other animals. Machine Learning, in turn, is the act of trying to replicate that pattern recognition ability, but in a scalable manner. Recognizing a pattern means fitting an equation into data - you already have to have a solid understanding of the results from the past before you can build predictive results for the future.

Now is the Time. Neural Networks are basically super-sized predictive models. Models are larger than they used to be because they can be - we have more robust data sets and more computing power to quickly solve modeled formulas now than ever before. More things are being tracked than ever, so we have more data points. All of this data can be stored efficiently on the cloud and computing power is finally at a point where it can keep pace with predictive analytics on a super-sized scale.

How Does It Work?

LLMs work because of pattern matching - to radically oversimplify the matter, it’s just math. Massive amounts of text are coalesced into data warehouses, which are then fed through models that collate this text and associate words with one another, providing numerical values for each word based on where they fit within a certain knowledge graph. These numeric values and associations create a probability that these words will be preceded and followed by other words. For example a word like fox might have a 27% chance of being followed by the word jumped. These probabilities and statistics are then fed through extremely complex models, which relate to other correlations in words, so that, using the prior example, a model would know that a quick fox might jump, but an injured fox, is less likely to take that action. When combined, these models are capable of generating text based on these assumptions and a variety of inputs.

There are likely maching learning PhD’s who could write their dissertations on every single word I just used in the last paragraph, so I massively oversimplified a very complicated subject that I barely understand, but the punchline is that math turns words into numbers and then can predict how those numbers fit together using more math. All words and word combinations, and phrases, and sentence structures and so forth then become a function of the numerical representations that these words correlate with. These numerical representations can then do things like answer questions, create stories, write poetry because LLMs know what all of that looks like in their system and can try to recreate it using their own models.

Almost everyone on the planet has utilized some version of this math at some point in their lives. Autofill and autocorrect on email clients and phone text messaging apps are extremely prevalent and have been around for a while. However, in 2022 LLMs have become so robust that their output has gone from guessing the next word in a sentence, to producing 1,000 word responses to hyper specific prompts (as seen above). In the next generation of LLMs (like GPT-4), they could potentially be able to 60,000 word novel (maybe better to call that a novella, but still) from a single prompt. This is because the scale of the data and the size of the models are going to increase significantly.

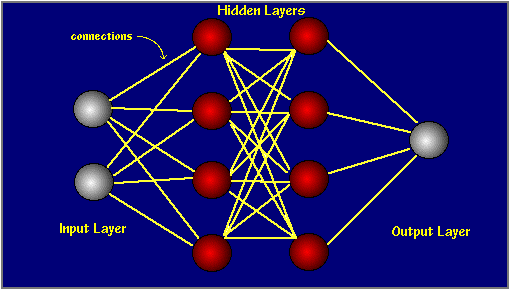

But it’s not just about the data, it’s about the size of the models as well. These models are sometimes referred to as neural networks. Neural network are the way that parameters for models are structured. They are effectively large interconnected nodes of logic that help drive the output of various inputs. So each node creates an if statement - if these words are used together, than these other words should also go together. In GPT-3, they have 175 billion parameters. This means that not only do they have a ton of data, but they are constantly making 175 billion separate decisions on all of this data simultaneously. So when I say extremely complicated if statements that’s what I mean.

Why Now?

The transformer model type is a new way of developing neural networks that requires minimal tagging and labeling. Five years ago, basically all LLMs and NLP models utilized model structures that required linear processing and significant upfront data labeling. Transformers (the type of model), are structured such that significantly less data labeling is required and they process all of the information in a model at the same time. So instead of reading a question left to right and missing key context that shows up later in the sentence structure, transformers have all of the context upfront. This allows them to process data much more quickly and as a result, able to output data more quickly as well, as well as increase the size of the model.

The other important factor are the advancements in scaling laws. In general statistical analysis, the general thought process for a long time has been that using too much data and too many parameters in a model is a big issue. In other words, too much information in the model can break it because it becomes overcomplicated. What recent breakthroughs in LLMs have shown (specifically GPT-3), is that as you increase the size of the model, it actually gets better at providing results. At this point larger and larger models fundamentally work better. This might hit a wall at some point, but for now, that wall hasn’t come yet.

Additionally, general theories of advancements in software still hold true for LLMs and generative ai. We have more data, we have faster software, we have better compute power, more people are interested the topic, Moore’s Law, etc. The AI space is an ongoing iterative industry - as more folks get interested in it, more advancement occur, which drive more folks to being interested in the space, and so forth.

So, while the the term “AI” is really something that has been around forever and the goalposts on it are constantly moving, generative AI and LLMs are the most recent breakthrough that is likely going to make a significant impact. Pretty soon, the stuff that very advanced LLMs are doing today will seem commonplace and might even lose the term “AI” altogether. It’s not about the industry or the sector, it’s about what the technology is able to actually accomplish.

Implications on Businesses

It’s very difficult to project out exactly what kind of uses cases will be the most utilized for technology like this. This is true of really any sorts of shifts in how things are done, sometimes it takes people a while to catch up.

Take, for example, the NBA 3-point line. When it was first introduced by the ABA in 1967, it was certainly seen as a gimmick, so much so that the NBA didn’t have one. When asked about the revolutionary concept, early commissioner of the ABA, George Mikan said: “We called it the home run, because the 3-pointer was exactly that. It brought fans out of their seats.” It was this sort of innovative thinking that made the ABA popular enough amongst fans that they eventually merged with the NBA. Even after the ABA was merged, it still took the the NBA several years before accepting the 3-pointer it into the fold. And even still, it took the NBA years before the 3-point line radically transformed the way the game was played. Players still treated it as a gimmick for a long time. Now, we live in world where the three-pointer is a fundamental part of any team’s strategy, radically different from several decades ago. It just people a while to figure it out.

Much like the ABA’s 3-pointer, people are still figuring out how to most effectively utilize LLMs. There are tons of use cases for something like this however, so early signs of what kind of impact this will create are enormous. There have been hundreds of startups launched in the generative AI space, and thousands in the artificial intelligence sector writ-large. Some startups are writing code based on simple prompts (the future of no-code is not going to be drag and drop anymore), while others are helping marketing organizations write copy, and still more are creating more sophisticated customer support focused chatbots. There are even folks in this space who are tackling the search function, trying to use this technology to take down one of tech’s previously most unassailable giants, Google. The list of use cases is nearly boundless (wait for next week’s newsletter to learn more).

But it’s not just about the startups in this space, but the downstream impacts that these companies will have on the rest of the economy. This should be a force for significant productivity enhancements (imagine cutting time spent on replying to emails by half) as well as creativity enhancements. Until the photo camera was invented, artists largely focused on trying to replicate the real world as much as possible. Once technology could do that more effectively than a person, artists became much more creative and produced more unique art.

Whenever there is a technology shift like this, the friction that occurs in the marketplace allows for new players to take advantage of it. Think about the first journalists who were able to more effectively take advantage of the Twitter network effects. Or the ecommerce brand who understood SEO better than its peers and was able to gain outsized market-share. Or the discount retailer who took over the world by embracing technology (not the one you are thinking of). The same thing is poised to occur once folks really start to understand this technology and deploy it across a number of fields.

Of course, there are going to be fits and starts, as well as a big graveyard of generative AI companies powered by LLMs. It’s going to get a little uglier before the picture becomes clearer. But that’s just the general cycle of technology like this.